ALIS’22: Artificial Intelligence for Live Video Streaming

colocated with ACM Multimedia 2022

October 2022, Lisbon, Portugal

March 01-03, 2022 | Denver, CO, USA

After running as an independent event for several years, the Mile-High Video Conference (MHV) was organized for the first time in 2022 by the ACM Special Interest Group on Multimedia (SIGMM). ACM MHV is a unique forum for participants from both industry and academia to present, share, and discuss innovations and best practices from multimedia content production to consumption.

This year, MHV hosted around 270 on-site participants and more than 2,000 online participants from academia and industry. Five ATHENA members travelled to Denver, USA, to present two full papers and four short papers at MHV 2022.

Here is a list of the full papers presented at MHV:

Super-resolution Based Bitrate Adaptation for HTTP Adaptive Streaming for Mobile Devices: The advancement of mobile hardware in recent years has made it possible to apply deep neural network (DNN) based approaches on mobile devices. This paper introduces a lightweight super-resolution (SR) network deployed on mobile devices and a novel adaptive bitrate (ABR) algorithm that leverages SR networks at the client to improve the video quality. More …

Take the Red Pill for H3 and See How Deep the Rabbit Hole Goes: With the introduction of HTTP/3 (H3) and QUIC at its core, there is an expectation of significant improvements in Web-based secure object delivery. An important question is what H3 will bring to the table for such services. To answer this question, we present the new features of H3 and QUIC, and compare them to those of HTTP/1.1, HTTP/2, and TCP. More …

Here is a list of the short papers presented at MHV:

RICHTER: hybrid P2P-CDN architecture for low latency live video streaming: RICHTER leverages existing works that have combined the characteristics of Peer-to-Peer (P2P) networks and CDN-based systems and introduced a hybrid CDN-P2P live streaming architecture. [PDF]

CAdViSE or how to find the sweet spots of ABR systems: CAdViSE provides a Cloud-based Adaptive Video Streaming Evaluation framework for the automated testing of adaptive media players. [PDF]

Video streaming using light-weight transcoding and in-network intelligence: LwTE reduces the cost of HTTP Adaptive Streaming (HAS) by enabling lightweight transcoding at the edge. [PDF]

Efficient bitrate ladder construction for live video streaming: This paper introduces an online bitrate ladder construction scheme for live video streaming applications using Discrete Cosine Transform (DCT)-energy-based low-complexity spatial and temporal features. [PDF]

Elsevier Computer Communications journal

[PDF]

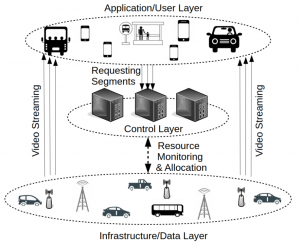

Alireza Erfanian (Alpen-Adria-Universität Klagenfurt), Farzad Tashtarian (Alpen-Adria-Universität Klagenfurt), Christian Timmerer (Alpen-Adria-Universität Klagenfurt), and Hermann Hellwagner (Alpen-Adria-Universität Klagenfurt).

Abstract: Recent advances in embedded systems and communication technologies enable novel, non-safety applications in Vehicular Ad Hoc Networks (VANETs). Video streaming has become a popular core service for such applications. In this paper, we present QoCoVi as a QoE- and cost-aware adaptive video streaming approach for the Internet of Vehicles (IoV) to deliver video segments requested by mobile users at specified qualities and deadlines. Considering a multitude of transmission data sources with different capacities and costs, the goal of QoCoVi is to serve the desired video qualities with minimum costs. By applying Dynamic Adaptive Streaming over HTTP (DASH) principles, QoCoVi considers cached video segments on vehicles equipped with storage capacity as the lowest-cost sources for serving requests.

We design QoCoVi in two SDN-based operational modes: (i) centralized and (ii) distributed. In centralized mode, we can obtain a suitable solution by introducing a mixed-integer linear programming (MILP) optimization model that can be executed on the SDN controller. However, to cope with the computational overhead of the centralized approach in real IoV scenarios, we propose a fully distributed version of QoCoVi based on the proximal Jacobi alternating direction method of multipliers (ProxJ-ADMM) technique. The effectiveness of the proposed approach is confirmed through emulation with Mininet-WiFi in different scenarios.
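The core idea of serving requests from the cheapest sources that still have capacity can be illustrated with a toy example. The sketch below is not the MILP model or the ProxJ-ADMM scheme from the paper: it is an exhaustive search over a tiny instance, it ignores deadlines, and the source names, capacities, and costs are hypothetical values chosen only for illustration.

```python
from itertools import product

# Hypothetical sources: name -> (capacity in Mbps, cost per Mbps).
# A vehicle cache is modeled as free but small, a CDN as large but costly.
SOURCES = {
    "vehicle_cache": (4.0, 0.0),
    "base_station": (10.0, 1.0),
    "cdn": (100.0, 3.0),
}


def min_cost_assignment(requests, sources=SOURCES):
    """Assign each request (a bitrate in Mbps) to exactly one source.

    Tries every possible assignment and returns (total_cost, assignment)
    for the cheapest one that respects all capacities, or None if no
    assignment is feasible. Only practical for very small instances.
    """
    names = list(sources)
    best = None
    for choice in product(names, repeat=len(requests)):
        # Accumulate the load each source would carry under this choice.
        load = {name: 0.0 for name in names}
        for rate, name in zip(requests, choice):
            load[name] += rate
        if any(load[name] > sources[name][0] for name in names):
            continue  # violates a capacity constraint
        cost = sum(rate * sources[name][1] for rate, name in zip(requests, choice))
        if best is None or cost < best[0]:
            best = (cost, choice)
    return best


if __name__ == "__main__":
    print(min_cost_assignment([3.0, 3.0, 6.0]))
```

With three requests of 3, 3, and 6 Mbps, only one 3 Mbps request fits into the free vehicle cache, so the remaining two go to the next-cheapest source. Exhaustive search grows exponentially with the number of requests, which is one way to see why a real deployment would need an optimization model or a distributed method instead.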

Every minute, more than 500 hours of video material are published on YouTube. These days, moving images account for a vast majority of data traffic, and there is no end in sight. This means that technologies that can improve the efficiency of video streaming are becoming all the more important. This is exactly what Hadi Amirpourazarian is working on in the Christian Doppler Laboratory ATHENA at the University of Klagenfurt. Read the full article here.

As a Valentine’s Day gift to video coding enthusiasts across the globe, we released version 1.0 of the open-source Video Complexity Analyzer (VCA) on Feb 14, 2022. The primary objective of VCA is to become the best spatial and temporal complexity predictor for every frame, video segment, and video, aiding the prediction of encoding parameters for applications like scene-cut detection and online per-title encoding. VCA leverages x86 SIMD and multi-threading optimizations for effective performance. While VCA is primarily designed as a video complexity analyzer library, a command-line executable is provided to facilitate testing and development. We expect VCA to be utilized in many leading video encoding solutions in the coming years.

VCA is available as an open-source library, published under the GPLv3 license. For more details, please visit the online documentation here. The source code can be found here.
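To give a rough feel for what DCT-energy-based complexity features look like, here is a minimal pure-Python sketch. It is not VCA's implementation or API: the function names are hypothetical, the DCT is unnormalized, and the energy is a plain unweighted sum of absolute AC coefficients, whereas VCA's actual weighting, normalization, and block handling are not reproduced here.

```python
import math


def dct2(block):
    """Unnormalized 2D DCT-II of a square block given as a list of rows."""
    n = len(block)
    return [
        [
            sum(
                block[x][y]
                * math.cos(math.pi * (2 * x + 1) * u / (2 * n))
                * math.cos(math.pi * (2 * y + 1) * v / (2 * n))
                for x in range(n)
                for y in range(n)
            )
            for v in range(n)
        ]
        for u in range(n)
    ]


def block_energy(block):
    """Sum of absolute AC coefficients (the DC term at [0][0] is skipped)."""
    coeffs = dct2(block)
    n = len(block)
    return sum(
        abs(coeffs[u][v]) for u in range(n) for v in range(n) if (u, v) != (0, 0)
    )


def frame_features(prev_blocks, cur_blocks):
    """Return (spatial, temporal) features for the current frame.

    spatial:  mean block energy of the current frame.
    temporal: mean absolute change in block energy versus the previous frame.
    Both frames are lists of co-located square blocks.
    """
    cur = [block_energy(b) for b in cur_blocks]
    prev = [block_energy(b) for b in prev_blocks]
    spatial = sum(cur) / len(cur)
    temporal = sum(abs(c - p) for c, p in zip(cur, prev)) / len(cur)
    return spatial, temporal


if __name__ == "__main__":
    flat = [[5, 5], [5, 5]]       # no texture: AC energy is (numerically) zero
    edge = [[0, 10], [0, 10]]     # vertical edge: non-zero AC energy
    print(frame_features([flat], [edge]))
```

A flat block yields essentially zero energy, a textured block yields a positive value, and a frame whose blocks differ from the previous frame yields a positive temporal feature. A production analyzer would use fast transforms and SIMD rather than this quadratic-time loop.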

Heatmap of spatial complexity (E)

Heatmap of temporal complexity (h)

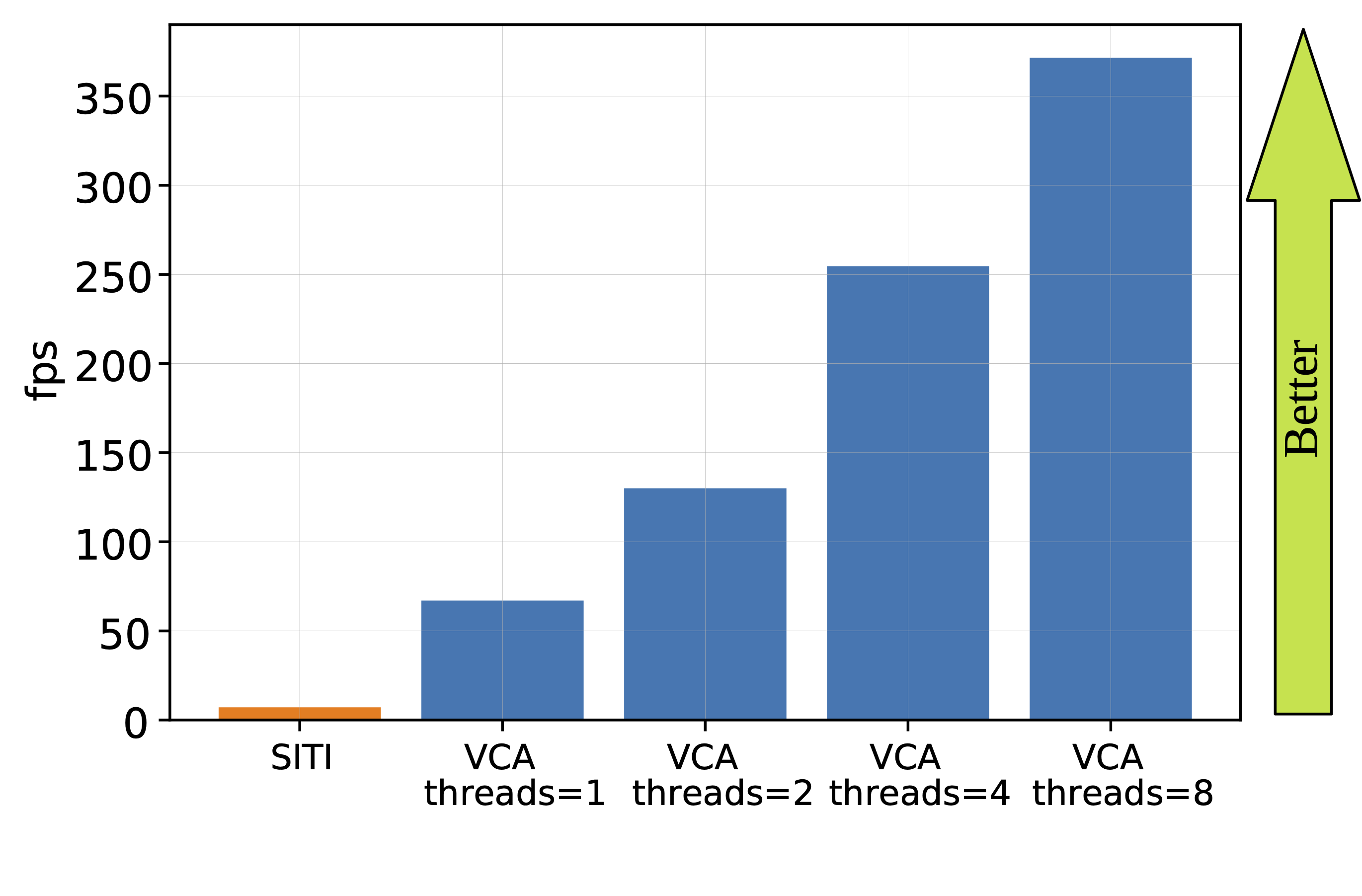

A performance comparison (frames analyzed per second) between VCA (with different levels of threading enabled) and Spatial Information/Temporal Information (SITI) [Github] is shown below:

Further information about a few possible VCA applications can be found at:

April 24-26, 2022 | Las Vegas, US

Vignesh V Menon (Alpen-Adria-Universität Klagenfurt), Hadi Amirpour (Alpen-Adria-Universität Klagenfurt), Christian Feldmann (Bitmovin, Klagenfurt), Adithyan Ilangovan (Bitmovin, Klagenfurt), Martin Smole (Bitmovin, Klagenfurt), Mohammad Ghanbari (School of Computer Science and Electronic Engineering, University of Essex, Colchester, UK), and Christian Timmerer (Alpen-Adria-Universität Klagenfurt).

Abstract:

Current per-title encoding schemes encode the same video content at various bitrates and spatial resolutions to find optimal bitrate-resolution pairs (known as a bitrate ladder) for each video content in Video on Demand (VoD) applications. In live streaming applications, however, a fixed bitrate ladder is used for simplicity and efficiency, to avoid the additional latency of finding the optimized bitrate-resolution pairs for every video content. Yet an optimized bitrate ladder may result in (i) decreased storage or network resources and/or (ii) increased Quality of Experience (QoE). In this paper, a fast and efficient per-title encoding scheme (Live-PSTR) is proposed, tailor-made for live Ultra High Definition (UHD) High Framerate (HFR) streaming. It includes a pre-processing step in which Discrete Cosine Transform (DCT)-energy-based low-complexity spatial and temporal features are used to determine the complexity of each video segment, based on which the optimized encoding resolution and framerate for streaming at every target bitrate are determined. Experimental results show that, on average, Live-PSTR yields bitrate savings of 9.46% and 11.99% to maintain the same PSNR and VMAF scores, respectively, compared to the HTTP Live Streaming (HLS) bitrate ladder.
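The general shape of a complexity-driven ladder, where the resolution and framerate chosen for a given target bitrate depend on how demanding the segment is, can be sketched as follows. This is purely illustrative and is not the Live-PSTR prediction model: the candidate list, the `required_kbps` rule, the `base_bpp` constant, and the single scalar `complexity` are all assumptions made for this example.

```python
# Hypothetical candidate encodings: (height in pixels, frames per second).
CANDIDATES = [(1080, 30), (1080, 60), (2160, 30), (2160, 60)]


def required_kbps(height, fps, complexity, base_bpp=0.02):
    """Toy estimate of the bitrate a candidate needs for acceptable quality.

    Assumes a 16:9 frame and a bits-per-pixel budget that grows linearly
    with a non-negative complexity score. Both are illustrative assumptions.
    """
    width = height * 16 // 9
    return width * height * fps * base_bpp * (1.0 + complexity) / 1000.0


def build_ladder(target_kbps, complexity, candidates=CANDIDATES):
    """Map each target bitrate to the most demanding candidate it can sustain.

    If no candidate fits a target, fall back to the least demanding one.
    """
    def need(candidate):
        return required_kbps(candidate[0], candidate[1], complexity)

    ladder = {}
    for target in target_kbps:
        feasible = [c for c in candidates if need(c) <= target]
        pool = feasible or [min(candidates, key=need)]
        ladder[target] = max(pool, key=need)
    return ladder


if __name__ == "__main__":
    targets = [1000, 3000, 12000]
    print("simple content: ", build_ladder(targets, complexity=0.0))
    print("complex content:", build_ladder(targets, complexity=1.0))
```

Under these toy numbers, simple content reaches 2160p at 60 fps at the highest target, while more complex content is assigned a lower framerate or resolution at the same bitrates. A real scheme would replace `required_kbps` with a model driven by measured spatial and temporal features.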

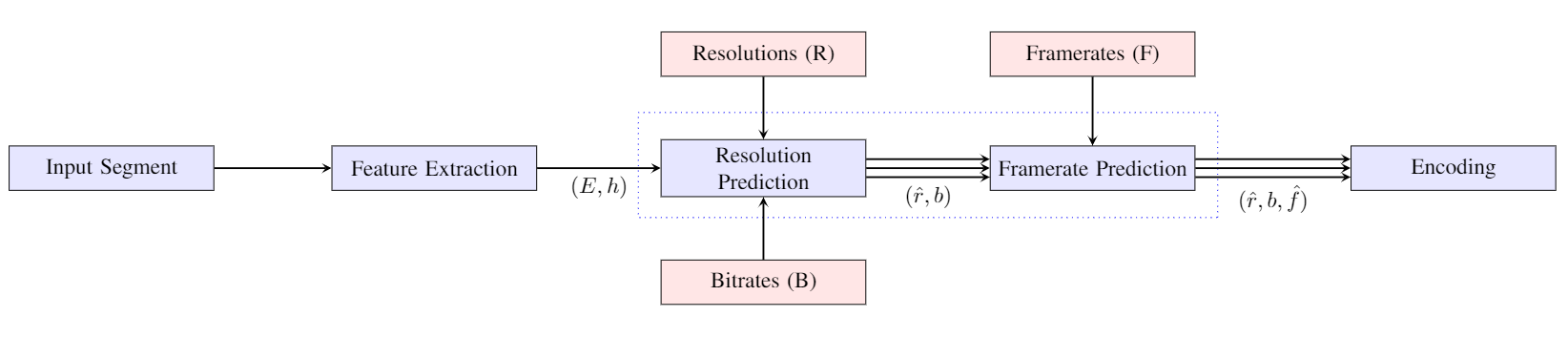

Architecture of Live-PSTR

The Emmy® Awards do not only honour the work of actors and directors but also recognize technologies that are steadily improving the viewing experience for consumers.

This year, the winners include the MPEG DASH Standard. Christian Timmerer (Department of Information Technology) played a leading role in its development.

Read more about it here.