Video Complexity Analyzer (VCA) and Video Complexity Dataset (VCD) were presented by Hadi Amirpour and Vignesh Menon in the NORM session of the VQEG meeting on 11 May 2022, in Rennes, France.

The presentation video of VCA is available here.

CHIST-ERA Conference 2022 [Website][Abstract]

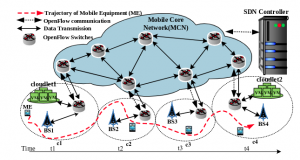

Empowered by today’s rich tools for media generation and collaborative production, and by convenient wireless access to the Internet (e.g., WiFi and cellular networks), crowdsourced live streaming over wireless networks has become very popular. However, crowdsourced wireless live streaming presents unique video delivery challenges that force a difficult tradeoff among three core factors: bandwidth, computation/storage, and latency. The resources available to these non-professional live streamers (e.g., radio channel and bandwidth) are limited and unstable, which can impair the streaming quality and the viewers’ experience. Moreover, the diverse live interactions among streamers and viewers can further worsen the problem. Recent technologies such as Software-Defined Networking (SDN), Network Function Virtualization (NFV), Mobile Edge Computing (MEC), and 5G facilitate crowdsourced live streaming applications for mobile users in wireless networks, but some open issues remain. One of the most critical is how to allocate an optimal amount of bandwidth, computation power, and storage to meet the required latency while increasing the QoE perceived by end users. Because this problem is NP-complete, machine learning techniques have been employed to optimize various components on the delivery path from the streamers to the end users who join the network through wireless links. Furthermore, to tackle the scalability issue, these solutions need to move toward distributed machine learning techniques.
In this short talk, we first introduce the main issues and challenges of current crowdsourced live streaming systems over wireless networks and then highlight the opportunities for leveraging machine learning techniques in different parts of the video streaming path, ranging from encoder and packaging algorithms at the streamers to the radio channel allocation module at the viewers, to enhance the overall QoE at a reasonable resource cost.

Elsevier Computer Communications Journal

[PDF]

Shirzad Shahryari (Ferdowsi University of Mashhad, Mashhad, Iran), Farzad Tashtarian (AAU, Austria), Seyed-Amin Hosseini-Seno (Ferdowsi University of Mashhad, Mashhad, Iran).

Abstract: Edge Cloud Computing (ECC) is a new approach for bringing Mobile Cloud Computing (MCC) services closer to mobile users in order to facilitate the execution of complicated applications on resource-constrained mobile devices. The main objective of the ECC solution with the cloudlet approach is to mitigate latency and augment the available bandwidth. This is done by deploying servers (a.k.a. “cloudlets”) close to the user’s device at the edge of the cellular network. As user requests mount, the resource constraints of a cloudlet lead to resource shortages. This challenge, however, can be overcome by using a network of cloudlets that share their resources. On the other hand, when the users’ mobility is considered along with the limited resources of the cloudlets serving them, user-cloudlet communication may need to go through multiple hops, which may seriously affect the communication delay between them and the quality of service (QoS).

Remote communication is becoming more and more important, in particular after the COVID-19 crisis. However, a more realistic visual experience requires more than the traditional two-dimensional (2D) interfaces we know today. Immersive media such as 360-degree, light fields, point clouds, ultra-high-definition, and high dynamic range can fill this gap. These modalities, however, face several challenges from capture to display. Learning-based solutions show great promise and significant performance gains over traditional solutions in addressing these challenges. This special session focuses on research works aimed at extending and improving the use of learning-based architectures for immersive imaging technologies.

Paper Submissions: 6th June, 2022

Paper Notifications: 11th July, 2022

June 26-29, 2022 | Nafplio, Greece

Ekrem Çetinkaya (Christian Doppler Laboratory ATHENA, Alpen-Adria-Universität Klagenfurt), Minh Nguyen (Christian Doppler Laboratory ATHENA, Alpen-Adria-Universität Klagenfurt), and Christian Timmerer (Christian Doppler Laboratory ATHENA, Alpen-Adria-Universität Klagenfurt)

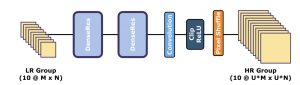

Abstract: Video is now an essential part of the Internet. The increasing popularity of video streaming on mobile devices and the improvement in mobile displays have brought challenges in meeting user expectations. Advancements in deep neural networks (DNNs) have led to successful applications in several computer vision tasks such as super-resolution (SR). Although DNN-based SR methods significantly improve over traditional methods, their computational complexity makes them challenging to apply on devices with limited power, such as smartphones. With the improvement in mobile hardware, especially GPUs, it is now possible to use DNN-based solutions, though existing DNN-based SR solutions are still too complex. This paper proposes LiDeR, a lightweight video SR network specifically tailored toward mobile devices. Experimental results show that LiDeR can achieve competitive SR performance with state-of-the-art networks while improving the execution speed significantly, i.e., 267% for ×4 upscaling and 353% for ×2 upscaling compared to ESPCN.

Keywords: Super-resolution, Mobile machine learning, Video super-resolution.

2022 IEEE International Conference on Multimedia and Expo (ICME) Industry & Application Track

July 18-22, 2022 | Taipei, Taiwan

Vignesh V Menon (Alpen-Adria-Universität Klagenfurt), Hadi Amirpour (Alpen-Adria-Universität Klagenfurt), Christian Feldmann (Bitmovin, Austria), Mohammad Ghanbari (School of Computer Science and Electronic Engineering, University of Essex, Colchester, UK), and Christian Timmerer (Alpen-Adria-Universität Klagenfurt)

Abstract:

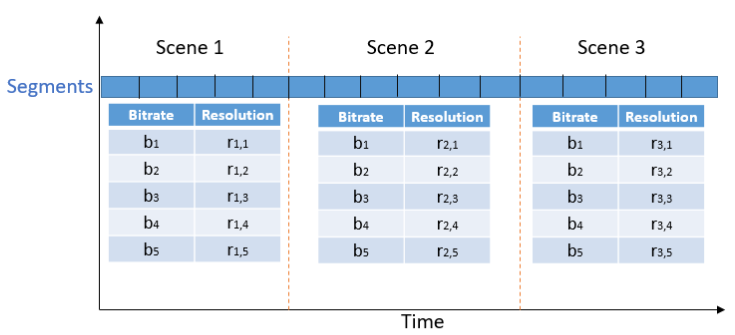

In live streaming applications, typically a fixed set of bitrate-resolution pairs (known as a bitrate ladder) is used during the entire streaming session in order to avoid the additional latency to find scene transitions and optimized bitrate-resolution pairs for every video content. However, an optimized bitrate ladder per scene may result in (i) decreased storage or delivery costs and/or (ii) increased Quality of Experience (QoE). This paper introduces an Online Per-Scene Encoding (OPSE) scheme for adaptive HTTP live streaming applications. In this scheme, scene transitions and optimized bitrate-resolution pairs for every scene are predicted using Discrete Cosine Transform (DCT)-energy-based low-complexity spatial and temporal features. Experimental results show that, on average, OPSE yields bitrate savings of up to 48.88% in certain scenes to maintain the same VMAF, compared to the reference HTTP Live Streaming (HLS) bitrate ladder without any noticeable additional latency in streaming.
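To make the idea of DCT-energy-based features more concrete, the following is a minimal Python sketch. It is not the feature definition used in the paper: the block size, the use of the summed absolute AC coefficients as "energy", the mean absolute energy difference as the temporal feature, and the `threshold` parameter are all assumptions chosen for illustration.

```python
import math

def dct2(block):
    """Naive orthonormal 2-D DCT-II of a square block (list of rows)."""
    n = len(block)

    def alpha(k):
        return math.sqrt(1.0 / n) if k == 0 else math.sqrt(2.0 / n)

    out = [[0.0] * n for _ in range(n)]
    for u in range(n):
        for v in range(n):
            s = 0.0
            for x in range(n):
                for y in range(n):
                    s += (block[x][y]
                          * math.cos((2 * x + 1) * u * math.pi / (2 * n))
                          * math.cos((2 * y + 1) * v * math.pi / (2 * n)))
            out[u][v] = alpha(u) * alpha(v) * s
    return out

def block_energy(block):
    """Assumed texture measure: sum of |AC coefficients| (DC excluded)."""
    coeffs = dct2(block)
    n = len(coeffs)
    return sum(abs(coeffs[u][v])
               for u in range(n) for v in range(n) if (u, v) != (0, 0))

def frame_block_energies(frame, size=4):
    """Energy of every non-overlapping size x size block of a luma frame."""
    height, width = len(frame), len(frame[0])
    energies = []
    for r in range(0, height - size + 1, size):
        for c in range(0, width - size + 1, size):
            block = [row[c:c + size] for row in frame[r:r + size]]
            energies.append(block_energy(block))
    return energies

def spatial_feature(frame, size=4):
    """Hypothetical spatial feature: mean block energy of one frame."""
    energies = frame_block_energies(frame, size)
    return sum(energies) / len(energies)

def temporal_feature(prev_frame, cur_frame, size=4):
    """Hypothetical temporal feature: mean |energy difference| per block."""
    prev_e = frame_block_energies(prev_frame, size)
    cur_e = frame_block_energies(cur_frame, size)
    return sum(abs(a - b) for a, b in zip(prev_e, cur_e)) / len(prev_e)

def is_scene_cut(prev_frame, cur_frame, threshold, size=4):
    """Flag a scene transition when the temporal feature exceeds threshold."""
    return temporal_feature(prev_frame, cur_frame, size) > threshold

# Two toy 8x8 luma frames: a flat one and a checkerboard.
flat = [[128] * 8 for _ in range(8)]
checker = [[255 if (r + c) % 2 else 0 for c in range(8)] for r in range(8)]
```

On these toy frames, the flat frame has essentially zero spatial energy while the checkerboard has a large one, so a jump from one to the other is flagged as a scene transition for any small threshold. A real system would calibrate the threshold, and the mapping from features to bitrate-resolution pairs, on actual content.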

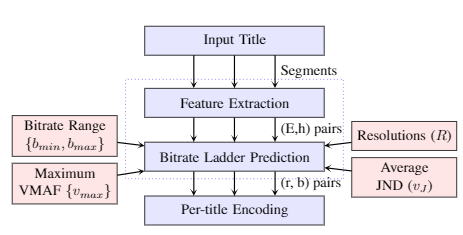

The bitrate ladder prediction envisioned using OPSE.

2022 IEEE International Conference on Multimedia and Expo (ICME)

July 18-22, 2022 | Taipei, Taiwan

Vignesh V Menon (Alpen-Adria-Universität Klagenfurt), Hadi Amirpour (Alpen-Adria-Universität Klagenfurt), Mohammad Ghanbari (School of Computer Science and Electronic Engineering, University of Essex, Colchester, UK), and Christian Timmerer (Alpen-Adria-Universität Klagenfurt)

Abstract:

In live streaming applications, typically a fixed set of bitrate-resolution pairs (known as a bitrate ladder) is used for simplicity and efficiency in order to avoid the additional encoding run-time required to find optimum resolution-bitrate pairs for every video content. However, an optimized bitrate ladder may result in (i) decreased storage or delivery costs and/or (ii) increased Quality of Experience (QoE). This paper introduces a perceptually-aware per-title encoding (PPTE) scheme for video streaming applications. In this scheme, optimized bitrate-resolution pairs are predicted online based on Just Noticeable Difference (JND) in quality perception to avoid adding perceptually similar representations in the bitrate ladder. To this end, Discrete Cosine Transform (DCT)-energy-based low-complexity spatial and temporal features for each video segment are used. Experimental results show that, on average, PPTE yields bitrate savings of 16.47% and 27.02% to maintain the same PSNR and VMAF, respectively, compared to the reference HTTP Live Streaming (HLS) bitrate ladder without any noticeable additional latency in streaming, accompanied by a 30.69% cumulative decrease in storage space for various representations.
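The idea of dropping perceptually similar representations can be illustrated with a short Python sketch. It is only an illustration under stated assumptions, not the PPTE algorithm: the function name `prune_ladder`, the JND threshold of 6 quality points, and all bitrate, resolution, and quality values below are hypothetical.

```python
def prune_ladder(candidates, jnd=6.0):
    """Keep a representation only if its predicted quality is at least
    one JND above the last kept one.

    candidates: list of (bitrate_kbps, resolution, predicted_quality).
    jnd: assumed quality step that viewers can just notice.
    """
    kept = []
    # Walk the ladder from the lowest to the highest bitrate.
    for rep in sorted(candidates, key=lambda r: r[0]):
        if not kept or rep[2] - kept[-1][2] >= jnd:
            kept.append(rep)
    return kept

# Hypothetical candidate ladder with predicted quality scores.
candidates = [
    (145, "360p", 40.0),
    (300, "432p", 44.0),
    (600, "540p", 52.0),
    (900, "540p", 55.0),
    (1600, "720p", 63.0),
    (2400, "720p", 70.0),
]

ladder = prune_ladder(candidates)
for bitrate, resolution, quality in ladder:
    print(bitrate, resolution, quality)
```

With these made-up numbers, the 300 kbps and 900 kbps rungs are removed because each is less than one assumed JND above the previously kept rung, which leaves four of the six representations. Fewer rungs mean less to encode and store, which is the kind of storage reduction the abstract reports.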

Architecture of PPTE