In July and August 2022, the ATHENA Christian Doppler Laboratory hosted four interns who worked on the following topics:

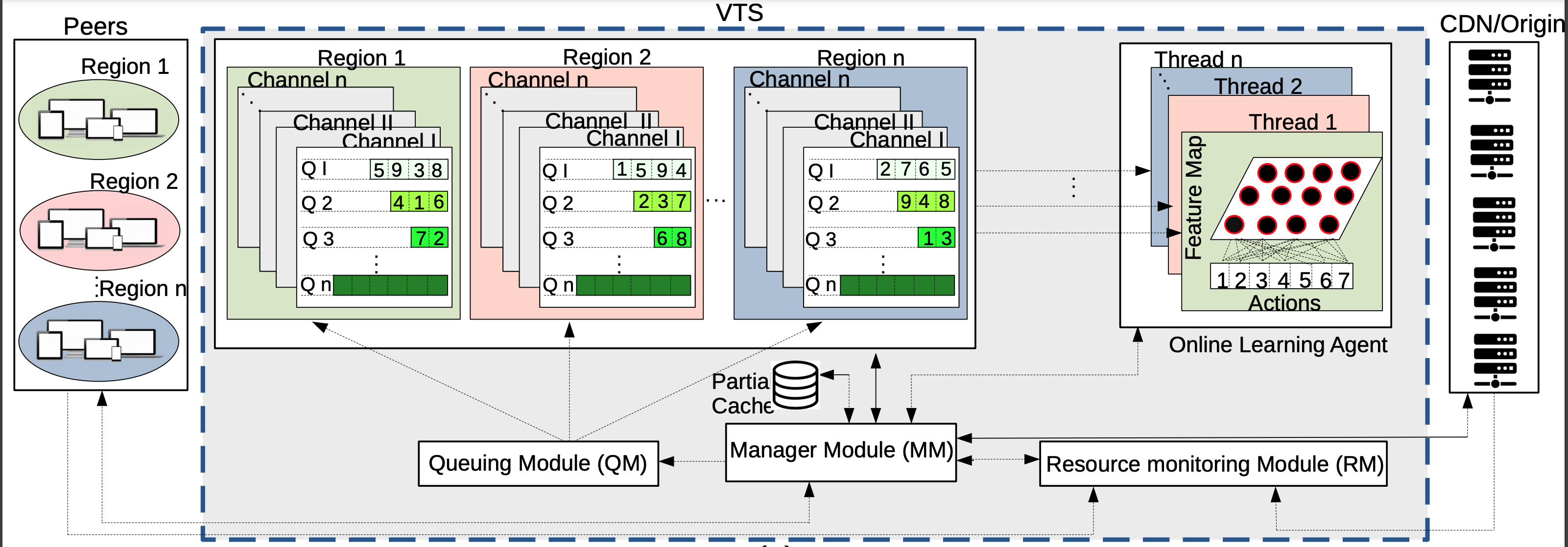

- Fabio Zinner: A Study and Evaluation on HTTP Adaptive Video Streaming using Mininet

- Moritz Pecher: Dataset Creation and HAS Basics

- Per-Luca Thalmann: Codec-war: is it necessary? Welcome to the multi-codec world

- Georg Kelih: Server Client Simulator for QoE with practical Implementation

At the end of their internships, the interns presented their work and results and received official certificates from the university. We believe the collaboration benefited both the laboratory and the interns. We would like to thank them for their genuine interest, productive work, and excellent feedback about our laboratory.

Fabio Zinner: “During my four weeks, I had an amazing practical and theoretical experience, which is very important for my future practical and academic work! It was great and fascinating to work with Python, Mininet, Linux, FFmpeg, GPAC, iPerf, etc. I really liked working with ATHENA, and the experience I gained was exceptional. I am also very happy that I had Reza Farahani as my supervisor!”

Per-Luca Thalmann: “I really enjoyed my four weeks at ATHENA. At first, I had to read a lot of articles and papers to get a basic understanding of video codecs and encoding. When I started my main project, which evaluated the performance of modern codecs on videos of different complexities, I noticed that everything I had read before helped me progress faster toward my end goal. After I got the results of my script, which ran for over a week, I also noticed some unexpected outcomes: at some very specific settings, an older codec achieved higher scores than its successor. Whenever I got stuck or had questions, my supervisor, Vignesh, helped me. I not only improved my technical knowledge, but also gained a lot of insight into how research works, what motivates it, and how the scientific research process unfolds.”

Georg Kelih: “I worked at ATHENA as an intern for a month. My tasks were to build a simulator that models server-client communication (ABR, bitrate ladder, resource allocation) and plots the results, and to write a server-client script in which the server runs on localhost and the client requests segments and plays them using python-vlc.

My daily routine was pretty relaxed, not only because we only had to work 30 hours a week, but also because the programming was fun and challenging. My day looked something like this: I get up, go to work, play a round of table soccer, and then start working. I open Visual Studio Code and write the code I thought about the day before, hoping it runs, but it shows a few error messages, so I start debugging. Then I notice that it is already time to eat and that I am hungry. After lunch, I finally find the silly error I made, think about new implementations and better ways to solve problems, and then it is already time to go, so I head to the Strandbad for a swim and drive home. That was roughly my daily routine. Personally, it was a bit too relaxed for my taste, because I like the pressure of a 40-hour week, especially since I only work during my holidays.

Apart from that, everything was great; I especially liked that there are so many people from different countries at ATHENA. Personally, I did not learn many new skills, but I discovered many new Linux tools and learned how to find information even more efficiently.”

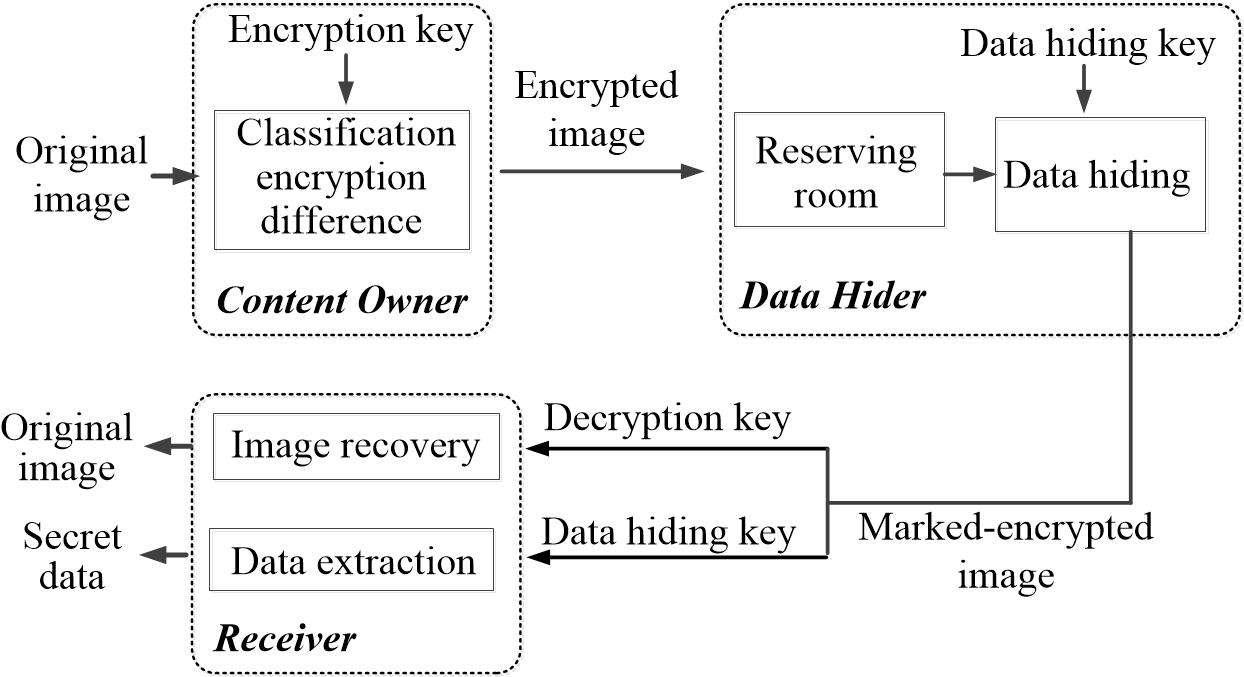

The secret data is embedded in the multiple most significant bits of the encoded difference. Experimental results show that the embedding capacity of the proposed algorithm is improved compared with the state-of-the-art algorithm.

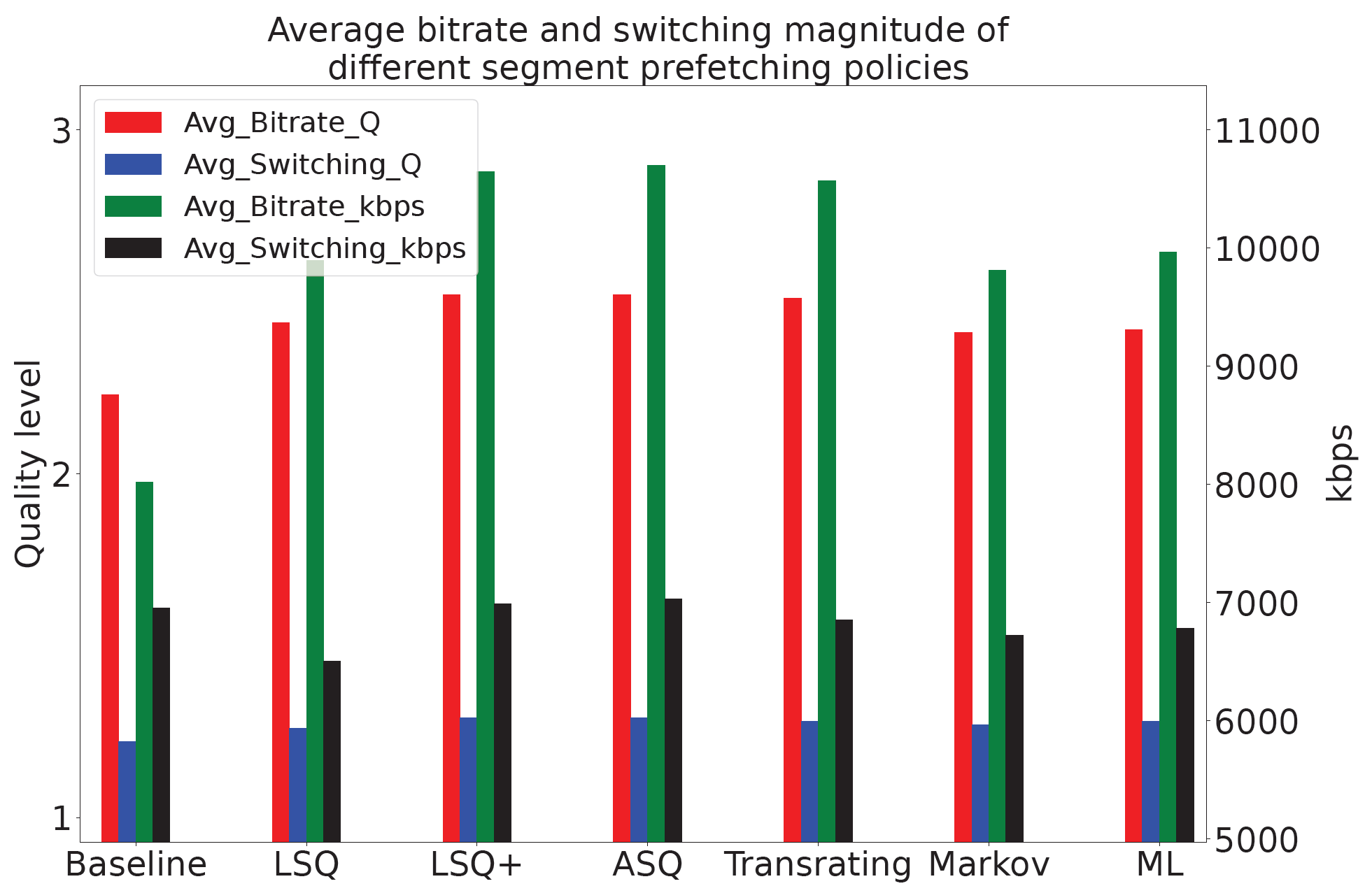

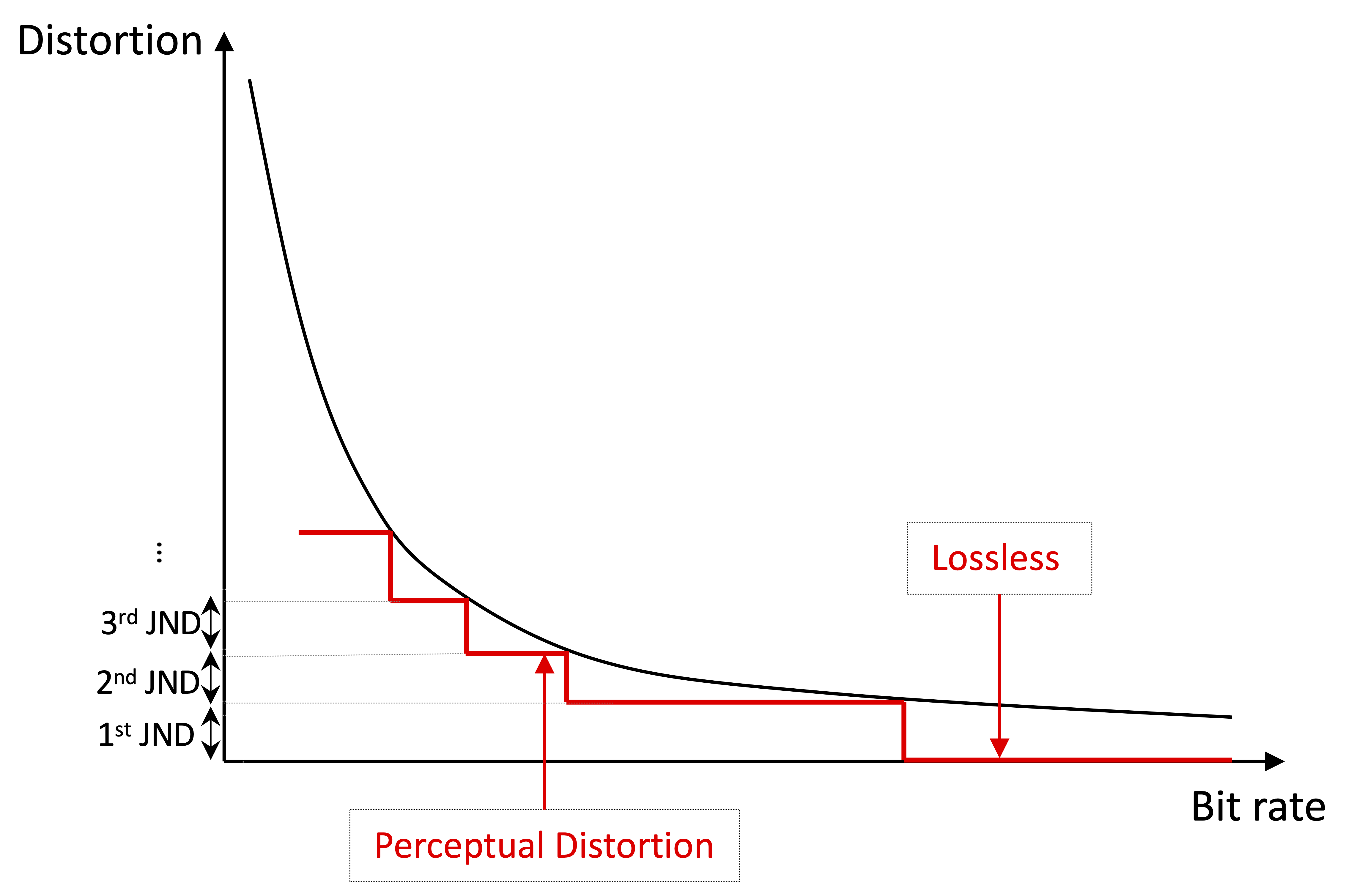

optimization and reduction of video delivery costs. Per-Title encoding, in contrast to a fixed bitrate ladder, shows significant promise to deliver higher quality video streams by addressing the trade-off between compression efficiency and video characteristics such as resolution and frame rate.