ACM Multimedia Conference – OSS Track

10-14 October 2022 | Lisbon, Portugal

Abdelhak Bentaleb (National University of Singapore), Zhengdao Zhan (National University of Singapore), Farzad Tashtarian (AAU, Austria), May Lim (National University of Singapore), Saad Harous (University of Sharjah), Christian Timmerer (AAU, Austria), Hermann Hellwagner (AAU, Austria), and Roger Zimmermann (National University of Singapore)

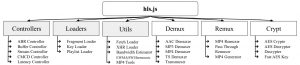

Low-latency live streaming over HTTP using Dynamic Adaptive Streaming over HTTP (LL-DASH) and HTTP Live Streaming (LL-HLS) has emerged as a new way to deliver live content with respectable video quality and short end-to-end latency. Satisfying these requirements while maintaining viewer experience in practice is challenging, and adopting conventional adaptive bitrate (ABR) schemes directly to do so will not work. Therefore, recent solutions including LoL+, L2A, Stallion, and Llama re-think conventional ABR schemes to support low-latency scenarios. These solutions have been integrated with dash.js, which supports LL-DASH. However, their performance in LL-HLS remains in question. To bridge this gap, we implement and integrate existing LL-DASH ABR schemes in the hls.js video player, which supports LL-HLS.

Moreover, a series of real-world trace-driven experiments have been conducted to check their efficiency under various network conditions including a comparison with results achieved for LL-DASH in dash.js.

Abstract: In general, manipulated videos will eventually undergo recompression. Video transcoding occurs when the recompression standard differs from the original one. Therefore, as a special sign of recompression, video transcoding can also be considered evidence of forgery in video forensics. In this paper, we focus on the detection and localization of video transcoding from AVC to HEVC (AVC-HEVC). There are two probable cases of AVC-HEVC transcoding – whole video transcoding and partial frame transcoding. However, existing forensic methods only consider the detection of whole video transcoding and do not address the localization of partial frame transcoding. In view of this, we propose a framewise scheme based on a convolutional neural network. First, our analysis shows that the essential difference between AVC-HEVC and HEVC is reflected in the high-frequency components of decoded frames. Then, the partition and location information of prediction units (PUs) is used to generate frame-level PU maps that make full use of the local artifacts of PUs. Finally, taking the decoded frames and PU maps as inputs, a dual-path network including specific convolutional modules and an adaptive fusion module is proposed. With it, the artifacts in a single frame can be better extracted, and the transcoded frames can be detected and localized. Coupled with a simple voting strategy, the results of whole transcoding detection can be easily obtained. A large number of experiments are conducted to verify the performance. The results show that the proposed scheme outperforms or rivals the state-of-the-art methods in AVC-HEVC transcoding detection and localization.
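The abstract does not spell out the voting rule, so the following is only a minimal sketch of how frame-level decisions might be aggregated into a whole-video decision. It assumes a hypothetical per-frame classifier that outputs a probability of being AVC-HEVC transcoded; the function names, the 0.5 frame threshold, and the simple majority ratio are illustrative assumptions, not the authors' method.

```python
from typing import List, Sequence


def localize_transcoded_frames(frame_scores: Sequence[float],
                               frame_threshold: float = 0.5) -> List[int]:
    """Return the indices of frames flagged as transcoded.

    frame_scores are hypothetical per-frame probabilities produced by some
    frame-level detector; the threshold is an assumed value.
    """
    return [i for i, score in enumerate(frame_scores)
            if score >= frame_threshold]


def vote_whole_video(frame_scores: Sequence[float],
                     frame_threshold: float = 0.5,
                     vote_ratio: float = 0.5) -> bool:
    """Judge the whole video as transcoded if more than vote_ratio of its
    frames are flagged (a plain majority vote with the default ratio)."""
    if not frame_scores:
        raise ValueError("frame_scores must not be empty")
    flagged = localize_transcoded_frames(frame_scores, frame_threshold)
    return len(flagged) / len(frame_scores) > vote_ratio
```

With this sketch, the per-frame list serves the localization case (partial frame transcoding), while the vote gives a single decision for the whole-video case.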

Hadi Amirpour is a postdoctoral research fellow at ATHENA, directed by Prof. Christian Timmerer. He received B.Sc. degrees in Electrical and Biomedical Engineering and pursued his M.Sc. in Electrical Engineering. He received his Ph.D. in computer science from the University of Klagenfurt in 2022. He was appointed co-chair of Task Force 7 (TF7) Immersive Media Experience (IMEx) at the 15th Qualinet meeting. He was involved in the project EmergIMG, a Portuguese consortium on emerging imaging technologies, funded by the Portuguese funding agency and H2020. Currently, he is working on the ATHENA project in cooperation with its industry partner Bitmovin. His research interests are image processing and compression, video processing and compression, quality of experience, emerging 3D imaging technology, and medical image analysis.

Join us at the Institute of Science and Technology Austria (ISTA) in Vienna on June 21.

More than 60% of internet data volume is video content consumed via streaming services like YouTube, Netflix, or Flimmit. We show how video streaming services work. It is essential that videos play back at the best possible quality on a wide range of end devices. With us, you can gain practical experience and learn to perceive differences in quality. In particular, we will show an animated video of how video streaming actually works, and visitors will be able to watch videos of different qualities and run some exciting experiments.

Bitmovin, founded by graduates and employees of the University of Klagenfurt, is a global leader in online video technology. The aim is to develop new technologies that will improve the video streaming experience in the future, for example, through smooth image quality. We will show you the latest achievements from ATHENA and how video streaming can become even more innovative in the future.
