Klagenfurt, July 10, 2023

Congratulations to Dr. Jesús Aguilar Armijo for successfully defending his dissertation on “Multi-access Edge Computing for Adaptive Video Streaming” at Universität Klagenfurt in the context of the Christian Doppler Laboratory ATHENA.

Abstract

In recent years, video streaming has become the dominant service on mobile networks. The two main reasons for the growth of video streaming traffic are the improved capabilities of mobile devices and the emergence of HTTP Adaptive Streaming (HAS). Hence, there is a demand for new technologies to cope with the increasing traffic load while improving clients’ Quality of Experience (QoE). The network plays a crucial role in the video streaming process. One of the key technologies on the network side is Multi-access Edge Computing (MEC), which has several key characteristics: computing power, storage, proximity to the clients and access to network and player metrics. Thus, it is possible to deploy mechanisms at the MEC node that assist video streaming.

This thesis investigates how MEC capabilities can be leveraged to support video streaming delivery, specifically to improve the QoE, reduce latency or increase storage and bandwidth savings. This dissertation proposes four contributions:

- Adaptive video streaming and edge computing simulator: A simulator named ANGELA, HTTP Adaptive Streaming and Edge Computing Simulator, was designed to test mechanisms running at the edge node that support video streaming. ANGELA overcomes some issues with state-of-the-art simulators by offering: (i) access to radio and player metrics at the MEC node, (ii) different configurations of multimedia content (e.g., bitrate ladder or video popularity distribution), (iii) support for Adaptive Bitrate (ABR) algorithms at different locations of the network (e.g., server-based, client-based and network-based) and (iv) a wide variety of evaluation metrics. ANGELA uses real 4G/5G network traces to simulate the radio layer, which offers realistic results without simulating the complex processes of the radio layer. In a simple MEC mechanism scenario, ANGELA reduced simulation time by 99.76% compared with the state-of-the-art simulator ns-3.

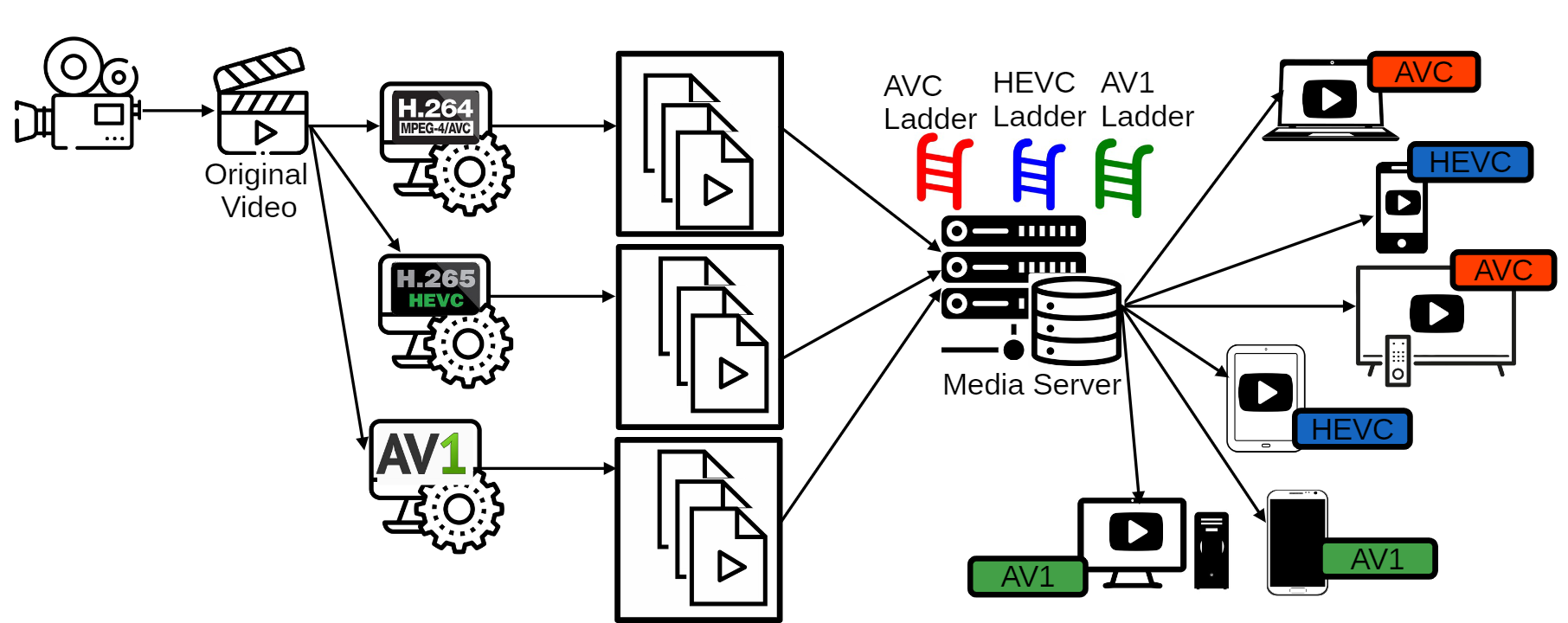

- Dynamic segment repackaging at the edge: Adaptive video streaming supports different media delivery formats such as HTTP Live Streaming (HLS) [11], Dynamic Adaptive Streaming over HTTP (MPEG-DASH), Microsoft Smooth Streaming (MSS) and HTTP Dynamic Streaming (HDS). This contribution proposes using the Common Media Application Format (CMAF) in the network’s backhaul and repackaging segments into the clients’ requested delivery format at the MEC node. The main advantages of this approach are bandwidth savings in the network’s backhaul and reduced storage costs at the server and edge side. According to our measurements, the proposed model also reduces delivery latency if the edge has more than 1.64 times the compute power per segment of the origin server, which is expected given the lower load at the edge.

- Edge-assisted adaptation schemes: The radio network and player metrics information available at the MEC node is leveraged to make better adaptation decisions. Two edge-assisted adaptation schemes are proposed: EADAS, which improves ABR decisions on the fly to increase clients’ QoE and fairness, and ECAS-ML, which moves the whole ABR algorithm logic to the edge and manages the tradeoff among bitrate, segment switches and stalls to enhance QoE. To accomplish that, ECAS-ML utilizes machine learning techniques to analyze the radio network throughput and predict the algorithm parameters that provide the highest QoE. Our evaluation shows that EADAS enhances the performance of ABR algorithms, increasing the QoE by 4.6%, 23.5%, and 24.4% and the fairness by 11%, 3.4%, and 5.8% when using a buffer-based, a throughput-based, and a hybrid ABR algorithm, respectively. Moreover, ECAS-ML shows a QoE increase of 13.8%, 20.85%, 20.07% and 19.29% against a buffer-based, throughput-based, hybrid and edge-based ABR algorithm, respectively.

- Segment prefetching and caching at the edge: Segment prefetching is a technique that consists of transmitting future video segments to a location closer to the client before they are requested. Hence, the segments are served with reduced latency. The MEC node is an ideal location for performing segment prefetching and caching due to its proximity to the client, its access to radio and player metrics and its storage and computing capabilities. Several segment prefetching policies are proposed and evaluated. They use different types and amounts of resources and are based on different techniques, such as a Markov prediction model, machine learning, transrating (i.e., reducing segment bitrate/quality) or super-resolution. Moreover, the influence of the caching policy, the bitrate ladder and the chosen ABR algorithm on segment prefetching is studied. Results show that segment prefetching based on machine learning increases the average bitrate by ≈46% and reduces the average number of stalls by ≈20%, while increasing bandwidth consumption by only ≈6% compared with the baseline simulation without segment prefetching. Other prefetching policies offer a different combination of performance enhancement and resource usage that can adapt to the service provider’s needs.
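To make the idea of a Markov-based prefetching policy more concrete, the following is a minimal sketch, not the policy evaluated in the dissertation. It assumes a first-order Markov model over the quality levels a client requested in the past; the names `MarkovPrefetcher`, `train` and `next_prefetch_target` are hypothetical and chosen for illustration only.

```python
from collections import defaultdict


class MarkovPrefetcher:
    """First-order Markov model over requested quality levels (illustrative sketch)."""

    def __init__(self):
        # counts[a][b] = how often quality b was requested right after quality a
        self.counts = defaultdict(lambda: defaultdict(int))

    def observe(self, prev_quality, next_quality):
        """Record one observed transition between consecutive segment requests."""
        self.counts[prev_quality][next_quality] += 1

    def predict(self, current_quality):
        """Return the most frequent follower of current_quality.

        Falls back to the current quality when no transition has been seen yet.
        """
        followers = self.counts.get(current_quality)
        if not followers:
            return current_quality
        return max(followers, key=followers.get)


def train(prefetcher, quality_history):
    """Feed a sequence of past quality levels into the model."""
    for prev, nxt in zip(quality_history, quality_history[1:]):
        prefetcher.observe(prev, nxt)


def next_prefetch_target(prefetcher, segment_index, current_quality):
    """Return (segment index, quality) that the edge node could prefetch next."""
    return segment_index + 1, prefetcher.predict(current_quality)


prefetcher = MarkovPrefetcher()
# Hypothetical request history of one client, expressed as quality-level indices.
train(prefetcher, [1, 2, 2, 2, 3, 3, 2, 2])
target = next_prefetch_target(prefetcher, segment_index=8, current_quality=2)
```

In this sketch the edge node would prefetch segment 9 at quality level 2, because quality 2 was most often followed by quality 2 in the observed history. A real policy would also have to weigh the extra backhaul bandwidth spent on wrong predictions.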

Each of these contributions focuses on a different aspect of content delivery for video streaming but can be used jointly to improve video streaming services using MEC capabilities.

EADAS and ECAS-ML can improve the quality adaptation decisions and enable segment prefetching compatibility without the throughput miscalculation issues of client-based ABR algorithms. Moreover, the dynamic repackaging mechanism can be used jointly with segment prefetching and edge-based adaptation schemes to increase bandwidth savings in the backhaul, which reduces the negative impact of some segment prefetching policies.
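The tradeoff among bitrate, segment switches and stalls that the edge-assisted adaptation schemes manage can be illustrated with a simple linear QoE score. This is a sketch under stated assumptions: the linear form and the weights `switch_penalty` and `stall_penalty` are hypothetical and are not taken from the dissertation, which may use a different QoE model.

```python
def linear_qoe(bitrates_mbps, stall_seconds, switch_penalty=1.0, stall_penalty=4.0):
    """Score a session as bitrate reward minus switch and stall penalties.

    bitrates_mbps: bitrate of each downloaded segment, in Mbit/s.
    stall_seconds: stall time experienced before each segment, in seconds.
    The penalty weights are illustrative assumptions, not measured values.
    """
    quality = sum(bitrates_mbps)
    # Magnitude of every bitrate change between consecutive segments.
    switches = sum(abs(b - a) for a, b in zip(bitrates_mbps, bitrates_mbps[1:]))
    stalls = sum(stall_seconds)
    return quality - switch_penalty * switches - stall_penalty * stalls


# Aggressive session: reaches a higher bitrate but switches often and stalls once.
aggressive = linear_qoe([1.0, 2.0, 2.0, 4.0], [0.0, 0.0, 1.0, 0.0])
# Conservative session: constant bitrate, no stalls.
steady = linear_qoe([2.0, 2.0, 2.0, 2.0], [0.0, 0.0, 0.0, 0.0])
```

With these assumed weights, the steady session scores 8.0 and the aggressive one 2.0, even though the aggressive session has the higher average bitrate. An adaptation scheme that tunes such parameters per network condition is navigating exactly this kind of tradeoff.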