32nd European Signal Processing Conference (EUSIPCO 2024)

26-30 August 2024, Lyon, France

Yiying Wei (AAU, Austria), Hadi Amirpour (AAU, Austria), Ahmed Telili (INSA Rennes, France), Wassim Hamidouche (INSA Rennes, France), Guo Lu (Shanghai Jiao Tong University, China) and Christian Timmerer (AAU, Austria)

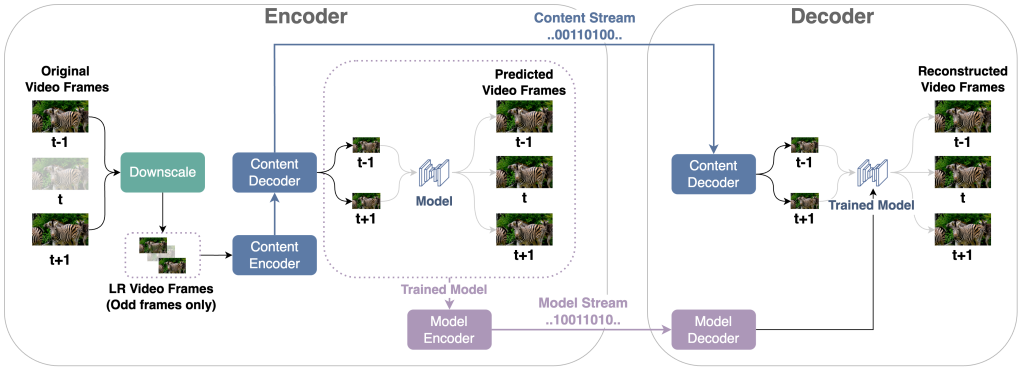

Abstract: Content-aware deep neural networks (DNNs) are trending in Internet video delivery. They enhance quality within bandwidth limits by transmitting videos as low resolution (LR) bitstreams with overfitted super-resolution (SR) model streams to reconstruct high-resolution (HR) video on the decoder end. However, these methods underutilize spatial and temporal redundancy, compromising compression efficiency. In response, our proposed video compression framework introduces spatial-temporal video super-resolution (STVSR), which encodes videos into low spatial-temporal resolution (LSTR) content and a model stream, leveraging the combined spatial and temporal reconstruction capabilities of DNNs. Compared to the state-of-the-art approaches that consider only spatial SR, our approach achieves bitrate savings of 18.71% and 17.04% while maintaining the same PSNR and VMAF, respectively.
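To make the idea of low spatial-temporal resolution (LSTR) content concrete, the following is a minimal sketch of how reducing both frame rate and frame size shrinks the number of samples that must be encoded. It is an illustration under stated assumptions, not the authors' pipeline: the helper names `to_lstr` and `sample_count`, the factors of 2, and the naive decimation are all hypothetical, and a real system would presumably use a proper downscaling filter and a video codec, with the STVSR model reconstructing the dropped frames and pixels at the decoder.

```python
from typing import List

Frame = List[List[int]]  # one grayscale frame as rows of pixel values


def to_lstr(video: List[Frame], spatial_factor: int = 2,
            temporal_factor: int = 2) -> List[Frame]:
    """Hypothetical helper: keep every `temporal_factor`-th frame and every
    `spatial_factor`-th pixel along each axis (naive decimation, no filtering)."""
    if spatial_factor < 1 or temporal_factor < 1:
        raise ValueError("factors must be >= 1")
    kept_frames = video[::temporal_factor]  # temporal downsampling
    return [
        [row[::spatial_factor] for row in frame[::spatial_factor]]  # spatial downsampling
        for frame in kept_frames
    ]


def sample_count(video: List[Frame]) -> int:
    """Total number of pixel samples in the clip."""
    return sum(len(row) for frame in video for row in frame)


# Toy clip (assumed sizes): 8 frames of 4 x 6 pixels with distinct values.
video = [[[t * 100 + y * 10 + x for x in range(6)] for y in range(4)]
         for t in range(8)]

lstr = to_lstr(video, spatial_factor=2, temporal_factor=2)
reduction = sample_count(video) / sample_count(lstr)
print(f"frames: {len(video)} -> {len(lstr)}, samples reduced {reduction:.0f}x")
```

With a spatial factor of 2 in each dimension and a temporal factor of 2, the raw sample count drops by 2 × 2 × 2 = 8. This only counts samples before encoding; the bitrate savings reported in the abstract are measured on encoded streams at matched PSNR and VMAF and also include the cost of the model stream.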