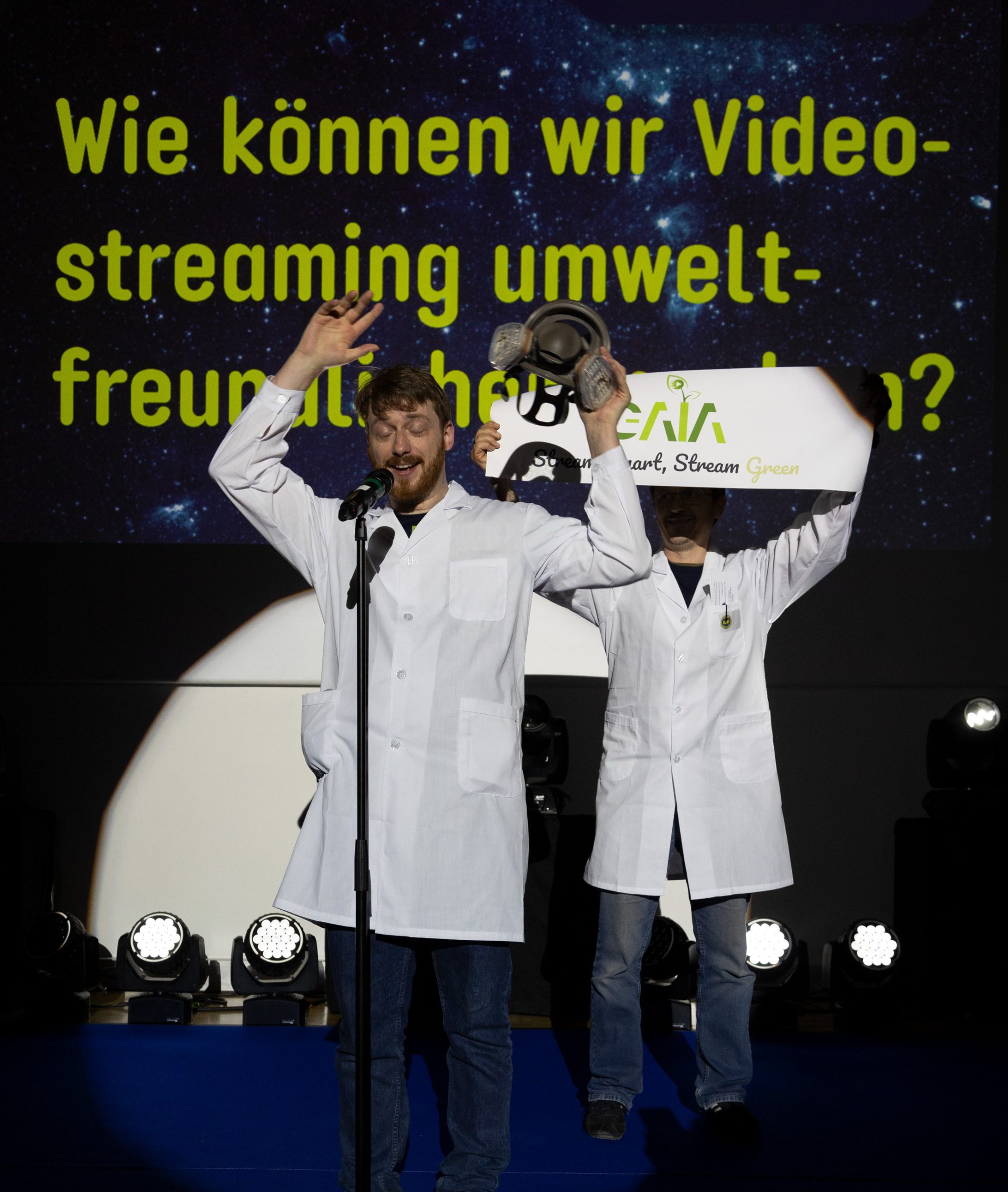

Energy Efficient Video Encoding for Cloud and Edge Computing Instances

11th FOKUS Media Web Symposium

11th JUN 2024 | Berlin, Germany

Abstract: The significant increase in energy consumption within data centers is driven primarily by the exponential growth in demand for complex computing workflows and storage resources. Video streaming applications, which are both compute- and storage-intensive, account for the majority of today's Internet services. To address this, the talk proposes a novel matching-based method for scheduling video encoding applications on Cloud resources. The method optimizes for user-defined objectives, including energy consumption, processing time, cost, and CO2 emissions, or a trade-off among these priorities.

Samira Afzal is a postdoctoral researcher at Alpen-Adria-Universität Klagenfurt, Austria, collaborating with Bitmovin. Previously, she was a postdoctoral researcher at the University of São Paulo, where she conducted in-depth research on IoT, SWARM, and surveillance systems. She received her Ph.D. in November 2019 from the University of Campinas (UNICAMP). During her Ph.D., she collaborated with Samsung on a project on mobile video streaming over heterogeneous wireless networks and on multipath transmission methods to improve perceived
video quality. Further information is available here.