2023 NAB Broadcast Engineering and Information Technology (BEIT) Conference

April 15-19, 2023 | Las Vegas, US

[PDF]

Vignesh V Menon (Alpen-Adria-Universität Klagenfurt), Prajit T Rajendran (Universite Paris-Saclay), Christian Feldmann (Bitmovin, Klagenfurt), Martin Smole (Bitmovin, Klagenfurt), Klaus Schoeffmann (Alpen-Adria-Universität Klagenfurt), Mohammad Ghanbari (School of Computer Science and Electronic Engineering, University of Essex, Colchester, UK), and Christian Timmerer (Alpen-Adria-Universität Klagenfurt).

Abstract:

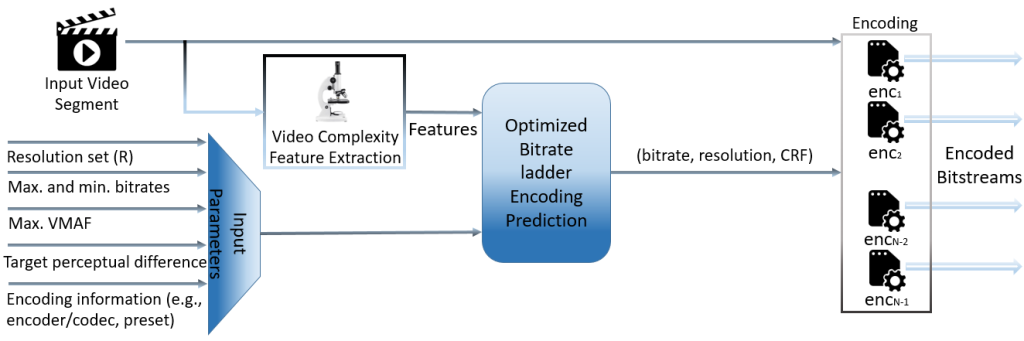

Currently, live streaming applications use a fixed set of bitrate-resolution pairs, termed a bitrate ladder. Similarly, two-pass variable bitrate (VBR) encoding schemes are not used in live streaming applications, to avoid the additional latency added by the first pass. Bitrate ladder optimization is necessary to (i) decrease storage or delivery costs and/or (ii) increase Quality of Experience (QoE). Two-pass VBR encoding improves compression efficiency because the second pass makes better encoding decisions using the first-pass analysis. In this light, this paper introduces a perceptually-aware constrained Variable Bitrate (cVBR) encoding scheme (Live VBR) for HTTP adaptive streaming applications, which includes a joint optimization of the perceptual redundancy between the representations of the bitrate ladder, maximizing the perceptual quality (in terms of VMAF) and optimized constant rate factor (CRF). Discrete Cosine Transform (DCT)-energy-based low-complexity spatial and temporal features for every video segment, namely, brightness, spatial texture information, and temporal activity, are extracted to predict a perceptually-aware bitrate ladder for encoding. Experimental results show that, on average, Live VBR yields bitrate savings of 7.21% and 13.03% to maintain the same PSNR and VMAF, respectively, compared to the reference HTTP Live Streaming (HLS) bitrate ladder with Constant Bitrate (CBR) encoding using the x264 AVC encoder, without any noticeable additional latency in streaming. These savings are accompanied by a 52.59% cumulative decrease in storage space for various representations and a 28.78% cumulative decrease in energy consumption, considering a perceptual difference of 6 VMAF points.

Figure: The encoding pipeline using Live VBR envisioned in this paper.
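To make the notion of perceptual redundancy between representations concrete, the following is a minimal sketch of one way a ladder could be thinned so that adjacent representations differ by at least 6 VMAF points. It is not the authors' algorithm, which jointly optimizes the ladder together with the CRF. It is a simple greedy filter, and the `Representation` class, the `prune_ladder` function, and all bitrate and VMAF values are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Representation:
    bitrate_kbps: int   # target bitrate of this rung (hypothetical value)
    height: int         # vertical resolution in pixels
    vmaf: float         # predicted VMAF for this rung (hypothetical input)


def prune_ladder(candidates, jnd=6.0):
    """Greedy filter: walk the ladder in ascending bitrate and keep a rung
    only if its predicted VMAF exceeds the last kept rung by at least `jnd`."""
    kept = []
    for rep in sorted(candidates, key=lambda r: r.bitrate_kbps):
        if not kept or rep.vmaf - kept[-1].vmaf >= jnd:
            kept.append(rep)
    return kept


# Illustrative ladder; the numbers are made up, not taken from the paper.
ladder = [
    Representation(145, 360, 40.0),
    Representation(300, 432, 44.0),
    Representation(600, 540, 47.0),
    Representation(900, 540, 55.0),
    Representation(1600, 720, 58.0),
    Representation(2400, 720, 62.0),
    Representation(6000, 1080, 90.0),
]

pruned = prune_ladder(ladder)
for rep in pruned:
    print(rep.bitrate_kbps, rep.height, rep.vmaf)
```

In this sketch, rungs whose predicted quality is within 6 VMAF points of the previously kept rung are dropped, which is the kind of pruning that would reduce the number of stored representations. How the predicted VMAF values are obtained (in the paper, from DCT-energy-based features) is outside the scope of the example.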