VCIP 2023

Monday, Dec 4, 2023

https://vcip2023.iforum.biz/page/goto

Lecturers:

- Hadi Amirpour, AAU, AT

- Christian Timmerer, AAU, AT

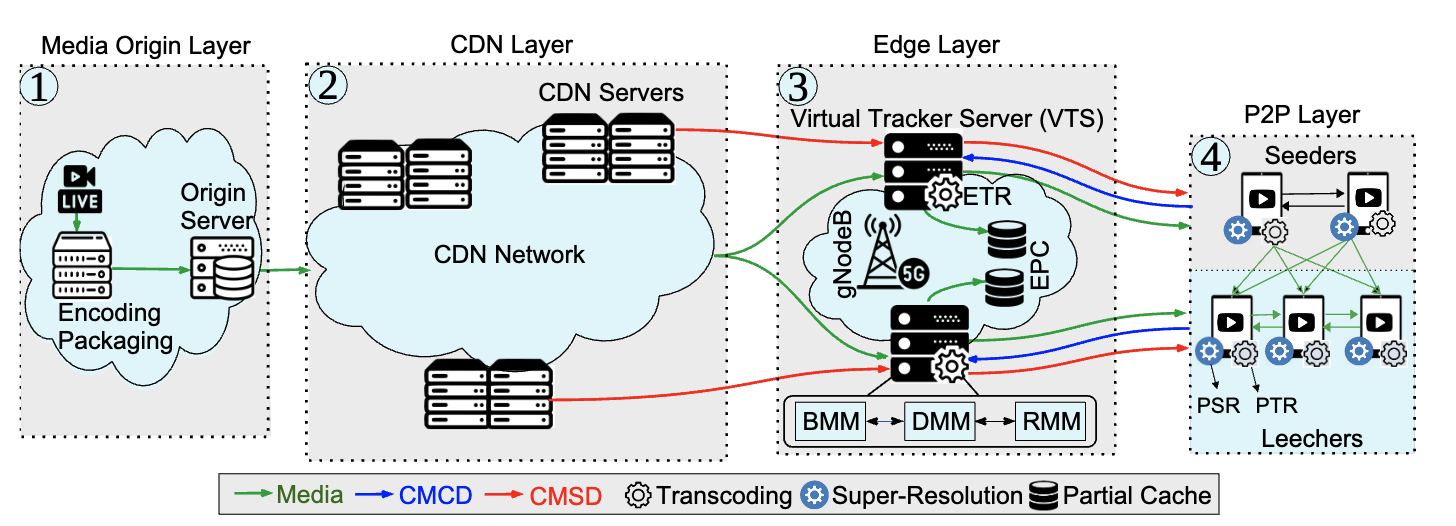

Abstract: Video streaming applications are the primary driver of Internet traffic, accounting for over 82% of IP traffic in 2022. HTTP Adaptive Streaming (HAS) is the prevailing technology for streaming live and video-on-demand (VoD) content over the Internet. In HAS, the video is first split into smaller chunks, referred to as segments, each covering a short playback interval. Each segment is then encoded in multiple representations (bitrate-resolution pairs), which together form the bitrate ladder. For each upcoming segment, the client selects the highest-quality representation that its current network conditions and device type allow. In this way, HAS enables the continuous delivery of live and VoD content over the Internet. As the demand for video streaming applications rises, new generations of video codecs and video content optimization algorithms are being developed. This tutorial first presents a detailed overview of video codecs, in particular the most recent standard video codec, Versatile Video Coding (VVC). Building on the video codecs, it then introduces per-title encoding methods, which optimize bitrate ladders according to the characteristics of each video. The tutorial shows how representations are selected so that bitrate ladders are optimized across several dimensions, including spatial resolution, frame rate, energy consumption, device type, and network condition. Next, it introduces novel methods that optimize bitrate ladders for live video streaming without adding noticeable latency. Finally, it covers fast multi-rate encoding approaches that reduce encoding time and cost.
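The client-side selection step described in the abstract can be illustrated with a minimal sketch. It is not the lecturers' method: it assumes a simple throughput-based heuristic, and the ladder values, the `Representation` class, the `select_representation` function, and the `safety` margin are all hypothetical names and numbers chosen for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Representation:
    """One rung of a bitrate ladder: a bitrate-resolution pair."""
    bitrate_kbps: int
    width: int
    height: int


# Hypothetical bitrate ladder; real ladders are content- and service-specific.
LADDER = [
    Representation(300, 640, 360),
    Representation(750, 854, 480),
    Representation(1500, 1280, 720),
    Representation(3000, 1920, 1080),
    Representation(8000, 3840, 2160),
]


def select_representation(ladder, throughput_kbps, max_height, safety=0.8):
    """Pick the highest-bitrate rung that fits the network and the device.

    throughput_kbps: the client's current throughput estimate (assumed given).
    max_height: the tallest resolution the device can display.
    safety: assumed margin so the chosen bitrate stays below the estimate.
    """
    # Device constraint: drop rungs the screen cannot display.
    playable = [r for r in ladder if r.height <= max_height]
    if not playable:
        raise ValueError("no representation fits the device")

    # Network constraint: keep rungs within the discounted throughput budget.
    budget = throughput_kbps * safety
    affordable = [r for r in playable if r.bitrate_kbps <= budget]

    # If nothing fits the budget, fall back to the lowest playable rung.
    if not affordable:
        return min(playable, key=lambda r: r.bitrate_kbps)
    return max(affordable, key=lambda r: r.bitrate_kbps)


if __name__ == "__main__":
    choice = select_representation(LADDER, throughput_kbps=5000, max_height=1080)
    print(choice)
```

A client would call such a function once per segment with a fresh throughput estimate, so the chosen rung follows the network as it changes. Production players typically also take buffer occupancy into account, which this sketch leaves out.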