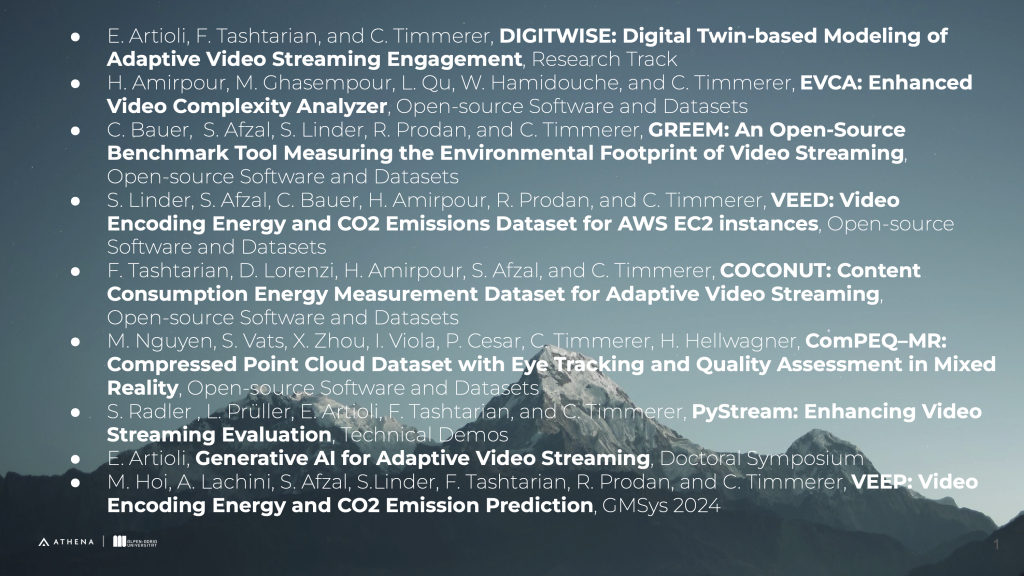

15th ACM Multimedia Systems Conference (MMSys)

15 – 18 April 2024 | Bari, Italy

- E. Artioli, F. Tashtarian, and C. Timmerer, DIGITWISE: Digital Twin-based Modeling of Adaptive Video Streaming Engagement, Research Track

- H. Amirpour, M. Ghasempour, L. Qu, W. Hamidouche, and C. Timmerer, EVCA: Enhanced Video Complexity Analyzer, Open-source Software and Datasets

- C. Bauer, S. Afzal, S. Linder, R. Prodan, and C. Timmerer, GREEM: An Open-Source Benchmark Tool Measuring the Environmental Footprint of Video Streaming, Open-source Software and Datasets

- S. Linder, S. Afzal, C. Bauer, H. Amirpour, R. Prodan, and C. Timmerer, VEED: Video Encoding Energy and CO2 Emissions Dataset for AWS EC2 instances, Open-source Software and Datasets

- F. Tashtarian, D. Lorenzi, H. Amirpour, S. Afzal, and C. Timmerer, COCONUT: Content Consumption Energy Measurement Dataset for Adaptive Video Streaming, Open-source Software and Datasets

- M. Nguyen, S. Vats, X. Zhou, I. Viola, P. Cesar, C. Timmerer, and H. Hellwagner, ComPEQ–MR: Compressed Point Cloud Dataset with Eye Tracking and Quality Assessment in Mixed Reality, Open-source Software and Datasets

- S. Radler, L. Prüller, E. Artioli, F. Tashtarian, and C. Timmerer, PyStream: Enhancing Video Streaming Evaluation, Technical Demos

- E. Artioli, Generative AI for Adaptive Video Streaming, Doctoral Symposium

- M. Hoi, A. Lachini, S. Afzal, S. Linder, F. Tashtarian, R. Prodan, and C. Timmerer, VEEP: Video Encoding Energy and CO2 Emission Prediction, GMSys 2024